GEO-Native CMS vs Traditional CMS: Why Architecture Matters for AI Search

GEO-native CMS platforms use AI agents to autonomously generate and refresh content for AI search visibility, while traditional CMS platforms like WordPress require manual updates and lack native AI optimization. SearchAtlas research found that URLs updated within 30 days were 2.5x more likely to be cited by LLMs than those older than 90 days, making automated content refresh essential for maintaining AI search presence.

TL;DR

Traditional CMS platforms were built for 2000s-era SEO and require manual content publishing, while GEO-native systems deploy AI agents that autonomously generate and optimize content for LLM citations

B2B teams see 3x higher AI citations with GEO-optimized content, with some achieving 10% of organic traffic from AI citations

Content freshness is critical: URLs updated within 30 days are 2.5x more likely to be cited by AI engines than URLs older than 90 days

Traditional platforms lack built-in structured data and entity clarity that AI engines require for accurate citation and attribution

GEO-native platforms provide end-to-end automation from content generation to visitor identification, eliminating manual bottlenecks that prevent scaling

GEO-native CMS platforms upend the way content shows up in AI search. By contrasting them with legacy WordPress-style stacks, we'll show why architecture - not just copy - now determines whether ChatGPT or Perplexity ever cites your brand.

Why Your CMS Architecture Now Determines AI Visibility

Over 1 billion people now use AI search every week to research products, compare solutions, and make purchasing decisions. This shift from keyword queries to conversational prompts has fundamentally changed what makes content discoverable. Traditional CMS platforms were architected for a different era of search - they excel at storing and displaying content for human visitors but were never designed for how large language models retrieve, understand, and cite information.

Generative Engine Optimization (GEO) is the practice of optimizing your content so that it appears as a source or citation in AI-generated responses from platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude. Unlike traditional SEO, which focuses on page rankings and keyword optimization, GEO emphasizes being directly referenced in AI-generated answers.

A GEO-native CMS is a content platform architected specifically for this new reality. As one industry guide explains, "Relixir's GEO-Native CMS provides a headless CMS with built-in AI agents that autonomously generate and refresh content optimized for LLM citations."

The stakes are significant. Analysis of 500,000+ web sessions reveals that ChatGPT users convert at 15.9% compared to Google search's 1.76% - roughly a 9x higher conversion rate (15.9 / 1.76 ≈ 9.0). These aren't casual browsers; they're high-intent buyers who have already done their research.

Core Architectural Differences: Manual SEO Roots vs. AI-First Design

The fundamental divide between traditional and GEO-native CMS platforms comes down to how they were built and what they optimize for.

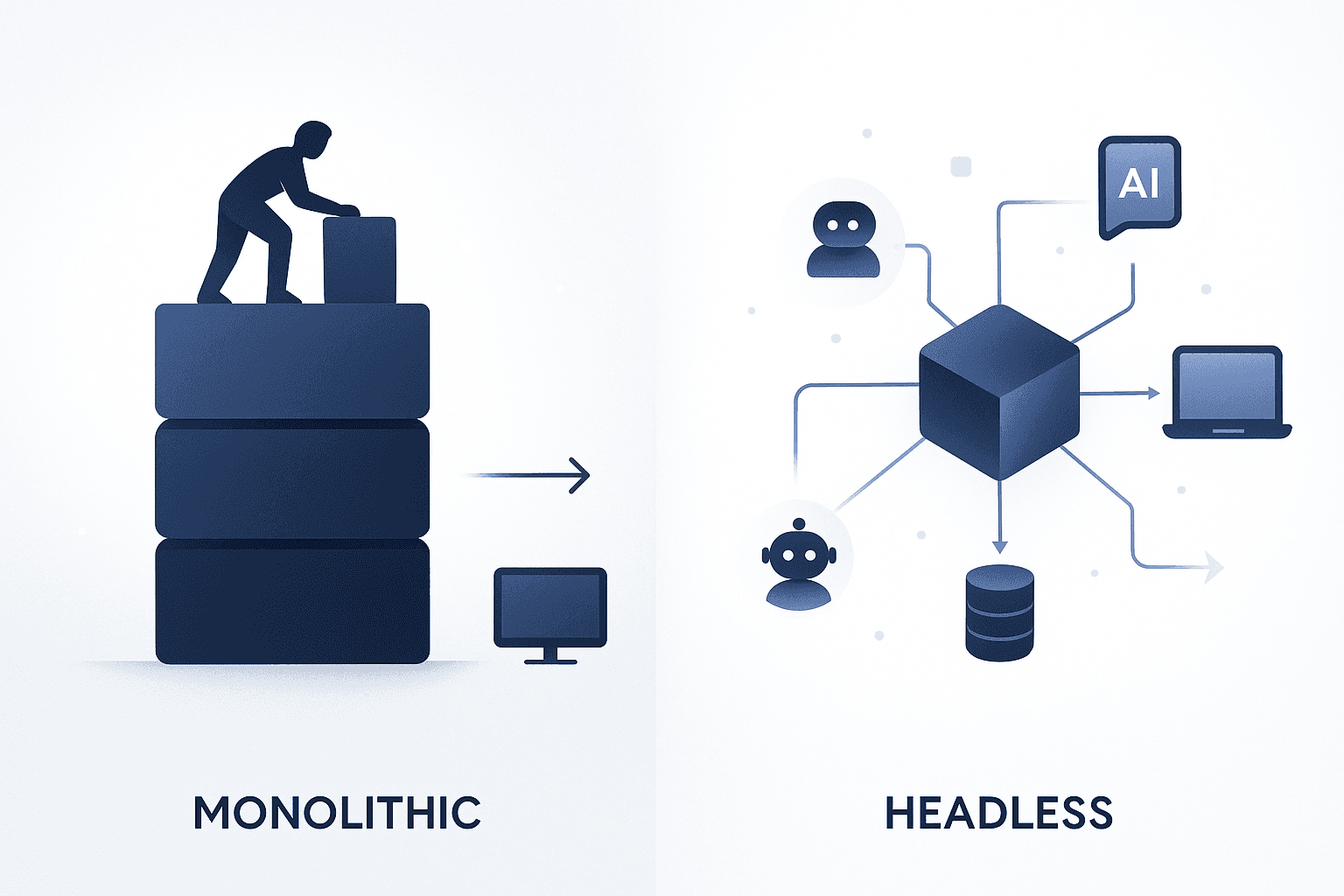

Traditional CMS architectures like WordPress, Joomla, and Drupal have long dominated the digital landscape. These systems tightly couple backend content management with frontend presentation layers, creating structures optimized for human browsing and 2000s-era SEO practices. According to academic research, "The rise of headless CMS architectures has revolutionized this space by decoupling backend content management from frontend presentation layers."

WordPress remains the dominant CMS in 2025, powering roughly 64% of CMS-driven sites. However, WordPress sites show a wide range of architectures, performance profiles, and operational complexity compared with more tightly integrated CMSs.

GEO platforms with native CMS integrations enable teams to optimize content directly at the source for AI visibility. This represents a fundamental shift from the manual, page-by-page approach that traditional platforms require.

| Feature | Traditional CMS | GEO-Native CMS |
|---|---|---|
| Content Publishing | Manual effort for each piece | Autonomous AI agents |
| Content Refresh | Manual audits required | Auto-syncs with knowledge base |
| AI Search Visibility | Zero native tracking | Built-in monitoring across 10+ platforms |
| Structured Data | Requires plugins or custom code | Automatically generated JSON-LD |
| Scale | Limited by human bandwidth | Unlimited collection generation |

Rise of Headless & Composable Delivery

The headless CMS model decouples content management from its presentation layer, enabling seamless content delivery across web, mobile, IoT, and other platforms.

According to Forrester's analysis, vendors are increasingly focusing on headless CMS architectures to support omnichannel content delivery. This shift reflects the reality that content must now serve multiple channels - including AI engines - from a single source.

As James McCormick notes, "Hybrid headless CMSs are becoming the pragmatic bridge between composability and control. AI now sits at the center of this balance - connecting creative and technical workflows so that enterprises can deliver faster, more consistent, and more intelligent experiences across every channel."

Key advantages of headless and composable architecture include:

API-first flexibility: Content retrieval and presentation layers operate independently

Enhanced scalability: Testing shows response times of 88-782 milliseconds with efficient resource utilization

Omnichannel readiness: Seamless delivery across web, mobile, and AI platforms

Future-proofing: Easier integration with emerging technologies and AI systems
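As a rough illustration of the decoupling described above, the sketch below stores one content record and renders it for two channels. The `ContentEntry` fields, both render functions, and the sample values are hypothetical and not taken from any particular CMS.

```python
import json
from dataclasses import dataclass, asdict
from html import escape


@dataclass
class ContentEntry:
    """A presentation-agnostic content record (hypothetical schema)."""
    slug: str
    title: str
    body: str
    updated: str  # ISO 8601 date string


def render_html(entry: ContentEntry) -> str:
    """Web channel: wrap the record in markup for human visitors."""
    return (
        f"<article><h1>{escape(entry.title)}</h1>"
        f"<p>{escape(entry.body)}</p></article>"
    )


def render_api(entry: ContentEntry) -> str:
    """API channel: serve the same record as JSON for apps or crawlers."""
    return json.dumps(asdict(entry), sort_keys=True)


# Sample data for illustration only.
entry = ContentEntry(
    slug="geo-vs-traditional-cms",
    title="GEO-Native CMS vs Traditional CMS",
    body="Architecture matters for AI search.",
    updated="2025-01-15",
)
html_page = render_html(entry)
api_payload = render_api(entry)
```

The point of the design is that `entry` is edited once and every channel picks up the change, which is harder to do when content lives inside page templates.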

Why Do AI Engines Reward Real-Time Content Updates?

Content freshness is one of the most underappreciated factors in AI search visibility. LLMs heavily prioritize recent content, and outdated information gets deprioritized or ignored entirely.

Research from SearchAtlas reveals compelling data: "URLs updated within 30 days were 2.5 times more likely to be cited by LLMs compared to those older than 90 days."

The pattern holds across all major AI platforms. Gemini demonstrates the strongest recency preference, while Perplexity cites a balanced mix of fresh and moderately aged URLs. OpenAI shows the most resilience across both enabled and disabled search conditions.

Additional freshness data reinforces this trend:

Nearly 65% of AI bot hits target content published in just the past year

Approximately 31% of citations from ChatGPT are from 2025

AI search engines prioritize domain-specific, well-structured content over third-party sources

| Content age | Share of AI bot hits |
|---|---|
| Past year (2025) | ~65% |
| Past 2 years (2024-2025) | ~79% |
| Past 3 years (2023-2025) | ~89% |
| Older than 6 years | 6% |
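The first three rows read as cumulative shares, since each window contains the one before it. Assuming that reading is correct, a short calculation recovers the approximate share attributable to each publication year:

```python
# Cumulative shares from the table above, in percent.
# Assumption: each row includes the rows before it.
cumulative = [("2025", 65), ("2024", 79), ("2023", 89)]

per_year = {}
previous = 0
for year, share in cumulative:
    # The difference between consecutive windows is that year's own share.
    per_year[year] = share - previous
    previous = share

print(per_year)  # {'2025': 65, '2024': 14, '2023': 10}
```

Under this reading, content from the most recent year draws more than four times the bot traffic of content from the year before it.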

Stale content can significantly impact AI visibility, leading to decreased search rankings and reduced traffic. Traditional CMS platforms often lack the capability to automatically refresh content, which creates an operational burden that most teams cannot sustain at scale.

Key takeaway: Without automated content refresh, maintaining AI search visibility takes manual effort that grows with every page you publish.
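A freshness audit of this kind can be sketched in a few lines. The 30-day and 90-day thresholds come from the SearchAtlas comparison quoted earlier; the bucket names, the page list, and the fixed `today` value are illustrative assumptions, and a real audit would read last-modified dates from your CMS or sitemap.

```python
from datetime import date

FRESH_DAYS = 30  # "updated within 30 days"
STALE_DAYS = 90  # "older than 90 days"


def freshness_bucket(last_updated: date, today: date) -> str:
    """Classify a page by the number of days since its last update."""
    age = (today - last_updated).days
    if age <= FRESH_DAYS:
        return "fresh"
    if age <= STALE_DAYS:
        return "aging"
    return "stale"


# Hypothetical pages and dates, for illustration only.
pages = {
    "/pricing": date(2025, 5, 20),
    "/blog/geo-guide": date(2025, 3, 15),
    "/docs/setup": date(2024, 11, 2),
}
today = date(2025, 6, 1)

report = {url: freshness_bucket(updated, today) for url, updated in pages.items()}
refresh_queue = sorted(url for url, bucket in report.items() if bucket == "stale")
```

Here `refresh_queue` holds the pages past the 90-day mark, which is the list a team (or an automated agent) would work through first.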

Structured Data & Entity Clarity: The New Ranking Factors

Structured data has become essential for AI search visibility. JSON-LD is a lightweight Linked Data format that is easy for humans to read and write, and easy for machines to parse and generate.

Entity-first content optimization focuses on creating content around specific entities - people, places, or things - to improve search engine visibility and relevance. Entities are the building blocks of search engines' understanding of the web, allowing them to connect related information and provide more accurate results.

The schema.org vocabulary can be used with many different encodings, including RDFa, Microdata, and JSON-LD. JSON-LD is the recommended format because it's easy to add to web pages without altering the HTML structure.
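To make this concrete, here is a minimal sketch that builds a schema.org `Article` object and wraps it in a `<script type="application/ld+json">` tag. The helper names, the example URL, and the publisher are placeholders; the property names (`headline`, `dateModified`, `publisher`) are standard schema.org terms.

```python
import json


def article_jsonld(headline: str, url: str, date_modified: str, publisher: str) -> dict:
    """Build a minimal schema.org Article description."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "url": url,
        "dateModified": date_modified,
        "publisher": {"@type": "Organization", "name": publisher},
    }


def as_script_tag(data: dict) -> str:
    """Serialize JSON-LD into a script tag that can sit anywhere in the page."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so a string value cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values for illustration only.
snippet = as_script_tag(
    article_jsonld(
        headline="GEO-Native CMS vs Traditional CMS",
        url="https://example.com/blog/geo-native-cms",
        date_modified="2025-01-15",
        publisher="Example Co",
    )
)
```

Because the markup lives in its own script element, the visible HTML does not change when the structured data is added or updated.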

Here's what proper entity and schema implementation enables:

Featured snippets: By focusing on entities, content creators improve their chances of appearing in prominent search results

Rich results: Using structured data can enhance search results, increasing click-through rates

AI citation accuracy: Clear entity signals help LLMs understand and correctly attribute information

Why JSON-LD Beats Microdata for AI Parsing

"Google recommends using JSON-LD for structured data whenever possible."

JSON-LD's advantages for AI parsing include:

Can be embedded directly into HTML without affecting visual layout

Supported by Google, Bing, and Yahoo!

Easier to read, maintain, and validate than inline microdata

Enables complex entity relationships without cluttering markup

A GEO-native approach automatically generates and maintains this structured data across all content, while traditional CMS platforms require manual implementation or plugin dependencies that often create technical debt.

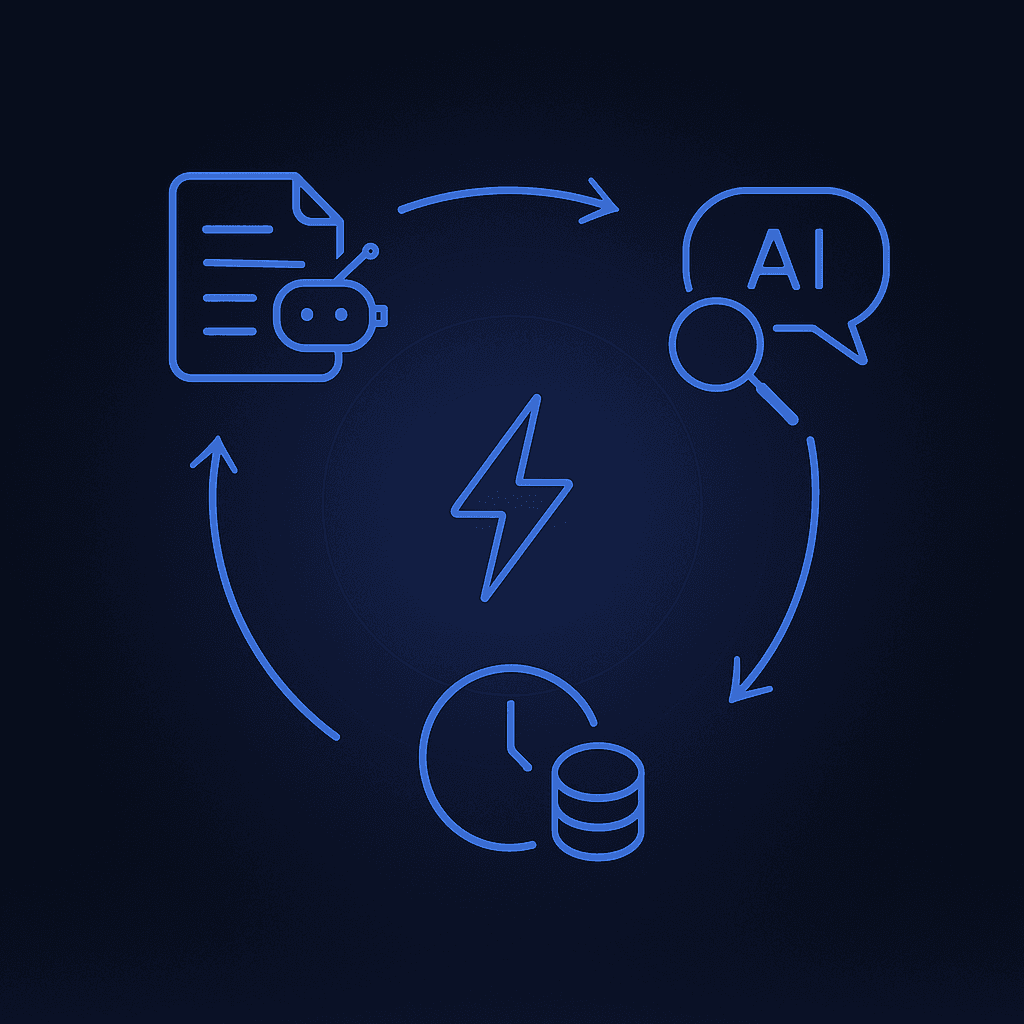

Agentic Automation: From Publishing Bottlenecks to Self-Refreshing Content

The shift from manual to autonomous content operations represents the core differentiator between traditional and GEO-native CMS platforms.

As McKinsey research emphasizes: "Value comes from end-to-end change. Broad productivity wins are table stakes. Impact comes from prioritizing the biggest growth problems and then solving them end to end in a domain."

GEO-native platforms like Relixir deploy AI agents that run on your CMS to generate, refresh, and optimize content cited by LLMs. This autonomous approach addresses three critical bottlenecks:

Content Generation: AI agents produce GEO-optimized content in minutes rather than days

Content Refresh: Autonomous systems continuously scan for outdated information and sync with knowledge bases

Citation Optimization: Every piece includes structural elements LLMs prioritize - factual snippets, statistics, FAQ sections, and JSON-LD schema
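One of the structural elements named above, an FAQ section with JSON-LD, might be generated along these lines. This is a sketch using the standard schema.org `FAQPage`, `Question`, and `Answer` types; the `faq_jsonld` helper is hypothetical and does not represent how any specific platform implements it. The sample questions are taken from this article's own FAQ.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """Turn (question, answer) pairs into a schema.org FAQPage object."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_jsonld(
    [
        (
            "What is a GEO-native CMS?",
            "A GEO-native CMS is a content platform designed for AI search.",
        ),
        (
            "How does content freshness impact AI search visibility?",
            "URLs updated within 30 days are 2.5 times more likely to be cited by AI engines.",
        ),
    ]
)
faq_json = json.dumps(faq, indent=2)
```

Generating the schema from the same question-and-answer pairs that render on the page keeps the visible FAQ and its structured data from drifting apart.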

Relixir's Conversation to Content feature extracts intelligence from support chats, sales conversations, and customer calls to automatically transform it into SEO and GEO-optimized content. This creates a flywheel: more customer interactions generate more content, which drives more AI search visibility.

Visitor ID & Pipeline Impact

Driving AI search traffic only matters if you can convert that traffic into pipeline. Visitor identification becomes critical as AI referrals grow.

The state of visitor identification reveals a significant accuracy gap: "Most visitor ID solutions get identities right 5-30% of the time."

Relixir's platform addresses this through built-in visitor identification that tracks comprehensive metrics including AI Mention Rate, Citation Rate, Share of Voice, and Position Rankings.
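The article does not define these metrics, so the sketch below uses plausible working definitions as assumptions: mention rate is the share of sampled AI answers that name the brand, citation rate is the share that cite a URL on the brand's domain, and share of voice is the brand's mentions divided by mentions of all tracked brands. The `Answer` type, the brand names, and the sample answers are hypothetical.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class Answer:
    """One sampled AI response and the URLs it cited (hypothetical shape)."""
    text: str
    cited_urls: list[str] = field(default_factory=list)


def _mentions(answers: list[Answer], brand: str) -> int:
    """Count answers whose text names the brand (case-insensitive)."""
    return sum(brand.lower() in a.text.lower() for a in answers)


def mention_rate(answers: list[Answer], brand: str) -> float:
    if not answers:
        raise ValueError("need at least one sampled answer")
    return _mentions(answers, brand) / len(answers)


def citation_rate(answers: list[Answer], domain: str) -> float:
    if not answers:
        raise ValueError("need at least one sampled answer")
    cited = sum(
        any(urlparse(url).hostname == domain for url in a.cited_urls)
        for a in answers
    )
    return cited / len(answers)


def share_of_voice(answers: list[Answer], brand: str, competitors: list[str]) -> float:
    counts = {name: _mentions(answers, name) for name in [brand, *competitors]}
    total = sum(counts.values())
    return counts[brand] / total if total else 0.0


# Fictional sample data.
answers = [
    Answer("Acme and Globex both offer this.", ["https://acme.example/guide"]),
    Answer("Globex is a popular choice.", ["https://globex.example/"]),
    Answer("Many teams pick Acme.", []),
    Answer("No clear leader exists.", []),
]
acme_mentions = mention_rate(answers, "Acme")
acme_citations = citation_rate(answers, "acme.example")
acme_voice = share_of_voice(answers, "Acme", ["Globex"])
```

Tracking mentions and citations separately matters because a brand can be named in an answer without its own pages being the cited source.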

| Metric | Traditional Approach | GEO-Native Platform |
|---|---|---|
| Person-level ID accuracy | 5-30% | 2-7x more accurate |
| Company-level identification | Baseline | 40% higher rates |
| AI attribution | Manual tracking | Automatic by engine |
| Lead conversion rate | Standard | Up to 20x higher |

Testimonials demonstrate the pipeline impact. As one Relixir customer reported: "We moved from 5th to 1st position in AI rankings and saw 38.85% monthly growth in leads from AI." (Relixir.ai)

Migrating from WordPress or Webflow: Debt Reduction or Visibility Drain?

Migration decisions require careful consideration of both technical debt and AI visibility implications.

"Migrating from WordPress to Webflow isn't just a platform change, it's a chance to remove years of accumulated technical debt." Technical debt is anything that makes change risky, slow, expensive, or dependent on specific people.

However, a SearchOne.ai analysis of 2,084 startups reveals concerning data: "Companies using website builders have 40% fewer HTML elements on average, making their content significantly harder for AI systems to understand and process."

Additional findings:

23% of builder sites use structured data, versus 67% of custom sites

52% reduction in processing success for builder sites

46.2% of YC/a16z startups rely on website builders, sacrificing AI visibility

When migration does make sense, results can be significant. One case study of a migration from WordPress to Webflow showed: "Within 9 months following the website migration, compared to the 9 months prior, our client achieved remarkable improvements across key metrics: Pageviews increased by 34%, Landing Page Sessions grew by 26%, Average Time on Page rose from 42 seconds to 83 seconds, Google Clicks jumped from 22k/month to 25.3k/month, Conversion Events increased by 10.4%."

The key question isn't which traditional CMS to choose, but whether any traditional platform can deliver the AI visibility your business requires.

When Should You Adopt a GEO-Native CMS?

The decision to adopt a GEO-native CMS depends on several factors related to your AI search strategy and content operations.

Native GEO solutions have a first-mover advantage. Of the 8 GEO companies with the most momentum - each posting more than the market's average 14% Mosaic growth in the past year - 7 were founded between 2023 and 2025.

Additionally, McKinsey projects that "Agentic AI will power more than 60 percent of the increased value that AI is expected to generate from deployments in marketing and sales."

Consider a GEO-native CMS if:

Your competitors are gaining AI visibility: Analytics show rivals appearing in ChatGPT, Perplexity, or Google AI Overviews for your target queries

Content freshness is slipping: Your team cannot maintain the update cadence AI engines require

Manual processes don't scale: Publishing, refreshing, and optimizing content consumes unsustainable resources

AI traffic is growing but unconverted: You're seeing AI referrals but can't identify or convert those visitors

Technical debt limits agility: Schema implementation, structured data, and integrations require engineering resources you don't have

Four capabilities have emerged as table stakes for vendors, and platforms without this full stack will fall behind:

Multi-platform monitoring (ChatGPT, Perplexity, Claude, Gemini, Google AI Overviews)

Actionable insights with automated content recommendations

Native CMS integration or full CMS functionality

Automated content generation and refresh

Relixir provides all four capabilities as a GEO-native CMS built specifically for AI search. The platform delivers measurable results within 30 days, with customers consistently achieving a 3-5x increase in AI search mention rate within 2-4 weeks of deployment.

Key Takeaways: Future-Proofing Content for AI Discovery

The architectural divide between traditional and GEO-native CMS platforms reflects a fundamental shift in how content gets discovered and consumed.

Traditional CMS platforms were built for 2000s-era SEO, requiring manual content publishing, manual content refresh cycles, and providing zero visibility into whether brands appear in AI search results. This architecture cannot scale to meet the demands of AI search.

GEO-native platforms solve this through autonomous content generation, continuous refresh capabilities, and built-in AI visibility monitoring. Relixir-generated blogs get cited 3x more often in AI search than traditional blogs.

The window to dominate AI-search-driven revenue is open now. LLMs increasingly pull from domain-specific content over third-party sources. Your blog is becoming the citation engine for AI search.

Action steps:

Audit your current AI search visibility across ChatGPT, Perplexity, and Google AI Overviews

Assess content freshness - identify pages older than 90 days that haven't been updated

Evaluate structured data implementation and entity clarity

Calculate the manual effort required to maintain AI visibility at your current content volume

Consider platforms like Relixir that provide end-to-end GEO capabilities

Companies that establish AI search visibility today will have a significant competitive advantage as the shift from traditional search to AI search accelerates. The architecture you choose determines whether your brand gets cited - or ignored.

What is a GEO-native CMS?

A GEO-native CMS is a content platform architected for AI search. Unlike WordPress or Webflow - which were built for human-first browsing and manual SEO - a GEO-native system embeds AI agents that autonomously generate, structure, and refresh content collections so large language models can crawl, understand, and cite them. It pairs headless delivery with built-in JSON-LD, entity tagging, and freshness monitoring - removing the publishing bottlenecks that keep traditional sites invisible to ChatGPT, Perplexity, or Google AI Overviews.

Why do traditional CMSs struggle to rank in AI search?

Legacy CMS platforms were tuned for 2000s-era SEO. They rely on manual publishing, store content in page-level silos, and expose few structured signals. AI engines now favor recency, fact-level chunks, and schema-rich pages. Studies show URLs updated within 30 days are 2.5x more likely to be cited, while builder sites with 40% fewer elements see 52% lower AI processing success. Without automated refresh, schema, and entity clarity, WordPress or Webflow sites simply lack the data LLMs need to trust - and quote - your brand.

Frequently Asked Questions

What is a GEO-native CMS?

A GEO-native CMS is a content platform designed for AI search. It embeds AI agents that autonomously generate, structure, and refresh content so that large language models can crawl, understand, and cite it.

Why do traditional CMSs struggle to rank in AI search?

Traditional CMSs, built for 2000s-era SEO, rely on manual publishing and lack structured signals. AI engines favor recency, fact-level chunks, and schema-rich pages, which traditional platforms often do not provide.

How does content freshness impact AI search visibility?

Content freshness is crucial for AI search visibility. URLs updated within 30 days are 2.5 times more likely to be cited by AI engines, as they prioritize recent and relevant information.

What are the benefits of a headless CMS architecture?

Headless CMS architecture decouples content management from presentation, allowing seamless delivery across multiple platforms, enhancing scalability, and future-proofing content for AI and emerging technologies.

How does Relixir's GEO-native CMS improve AI search visibility?

Relixir's GEO-native CMS uses AI agents for autonomous content generation and refresh, ensuring content is optimized for AI citation with built-in monitoring and structured data, significantly improving AI search visibility.

Sources

https://searchatlas.com/research/url-freshness-in-llm-generated-answers/

https://relixir.ai/blog/best-geo-platforms-with-cms-integrations

https://frase.io/blog/what-is-generative-engine-optimization-geo

https://akselera.tech/en/insights/guides/geo-generative-engine-optimization-guide

https://relixir.ai/blog/relixir-vs-profound-vs-athenahq-geo-platform-comparison-2025

https://www.forrester.com/blogs/announcing-the-cms-wave-q3-2023/

https://searchatlas.com/blog/url-freshness-in-llm-responses-search-enabled-vs-disabled-analysis/

https://thedigitalbloom.com/learn/2025-ai-citation-llm-visibility-report/

https://vladimirsiedykh.com/blog/json-ld-schema-complete-guide-rich-snippets-seo

https://searchengineland.com/guide/entity-first-content-optimization

https://customers.ai/state-of-website-visitor-identification

https://www.broworks.net/blog/brands-migrate-from-wordpress-to-webflow-to-reduce-technical-debt

https://searchone.ai/reports/silicon-valley-website-builders

https://www.searchcentral.io/migrating-3500-urls-to-webflow-case-study

https://www.cbinsights.com/research/geo-companies-winning-ai-search/