How to Structure Content Collections for LLM Citations with GEO-Native CMS

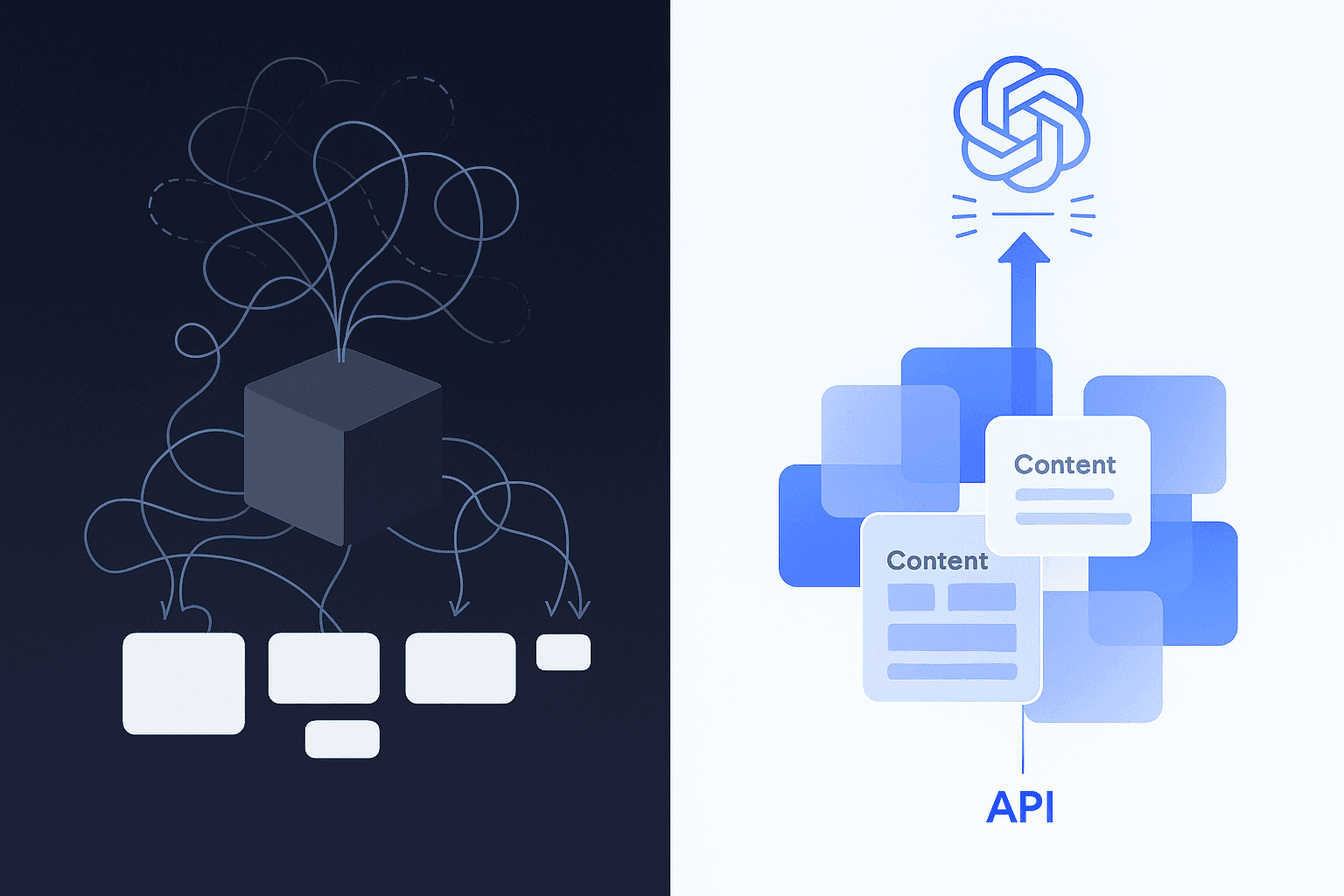

Collection-based content architecture enables AI search engines to traverse and cite your information more effectively than traditional page-centric CMS platforms. Modern systems require modular, API-first structures that organize content into reusable collections with defined relationships, allowing LLMs to extract authoritative answers from comprehensive content libraries rather than scattered HTML pages.

TLDR

Traditional CMS platforms built for 2000s-era SEO cannot support the collection-based architecture that AI search engines need for citations

61% of B2B marketing decision-makers plan to increase CMS spending as businesses consolidate to single platforms for efficiency

Collection-based systems use modular content types with defined attributes and relationships that LLMs can easily parse

Structured data implementation with JSON-LD schema improves machine understanding and citation quality

Automated content refresh workflows maintain accuracy at scale, with different content types requiring monthly to semi-annual updates

GEO-native platforms like Relixir enable unlimited content generation within collections specifically designed for AI search visibility

Well-structured content collections are pivotal for earning LLM citations. AI search engines need organized, comprehensive content libraries to draw from, not scattered individual pages. In this guide, you will learn a 5-step framework for designing collection-based architectures that maximize your visibility in AI-generated answers.

Why Do Content Collections Matter for LLM Citations?

AI search engines don't just scan web pages. They assemble direct answers by reading, summarizing, and cross-checking multiple sources in real time.

As one industry analysis puts it: "AI search engines need well-organized, comprehensive content libraries to draw from -- not scattered individual pages." (Passionfruit)

Traditional CMS platforms like Webflow, WordPress, and Contentful were built for 2000s-era SEO. They require manual content publishing, manual content refresh cycles, and provide zero visibility into whether brands appear in AI search results.

The shift is real: according to Forrester's Q1 2025 CMS Wave report, headless delivery and composable architecture were building blocks for the AI-powered reimagination of digital content management. The infrastructure you choose therefore directly affects whether LLMs can find, understand, and cite your content.

Key takeaway: Collection-based architecture enables AI search engines to retrieve and cite your content as authoritative answers, which template-based CMS simply cannot support at scale.

Where Do Traditional CMS Platforms Fall Short?

Monolithic CMS platforms create significant barriers for AI search visibility.

A monolithic CMS, one that renders the interface, content, data, and users in a single package, is a thing of the past. These systems were designed to display content for human visitors, not for how large language models retrieve and cite information.

Forrester's 2025 Buyer's Guide confirms that "time to market" is the primary business driver for CMSes. Yet traditional platforms often struggle with delivering personalized content experiences and cannot keep pace with AI-driven query volumes.

| Traditional CMS Limitation | Impact on LLM Citations |
|---|---|
| Page-based architecture | Content buried in HTML, hard to extract |
| Manual publishing workflows | Cannot scale to cover long-tail queries |
| No automated refresh | Outdated content gets deprioritized |
| Template-driven structure | Inconsistent data formatting for AI |

Taken together, these limitations make page-centric platforms poorly suited to the precision that LLMs require when generating citations.

What Are the Core Principles of Collection-Based Architecture?

Collection-based architecture rests on three design principles: modularity, API-first access, and reusability.

"CMS Collections are the backbone of your Webflow CMS site. They're the equivalent of a database or spreadsheet, where you can store and manage structured content."

-- Webflow

Composable content is a means of orchestrating and structuring digital content from any system. It organizes text, images, graphics, sound, and even data into small but meaningful pieces that teams can use in any number of combinations across channels and regions.

This approach delivers measurable benefits. Modular content lets teams build once and reuse across multiple campaigns and channels.

Modularity & Reuse Patterns

The atom-molecule-organism pattern provides a practical framework for structuring modular content:

| Component Level | Definition | Example | Reuse Potential |
|---|---|---|---|
| Atoms | Smallest units | Button text, product SKU | ~95% |
| Molecules | Grouped atoms | Product card, testimonial | ~80% |
| Organisms | Complex sections | Hero banner, FAQ accordion | ~60% |

By breaking content into reusable elements, it's possible to reduce localization costs by 80%. With modular content, updates cascade automatically, ensuring consistency across all channels where that content appears.
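One way to picture the cascade is to have larger components hold references to shared atoms instead of copies. The sketch below is a minimal illustration under that assumption; the `Atom` and `Molecule` classes and the sample content are hypothetical, not any particular CMS's API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Atom:
    """Smallest content unit, e.g. a product name or a price label."""
    key: str
    value: str


@dataclass
class Molecule:
    """A group of atoms, held by reference rather than copied."""
    name: str
    atoms: list[Atom] = field(default_factory=list)

    def render(self) -> str:
        # Values are read at render time, so atom edits are always picked up.
        return " | ".join(atom.value for atom in self.atoms)


# Hypothetical content: one price atom shared by two molecules.
price = Atom("starter_price", "$49/month")
product_card = Molecule("product_card", [Atom("product_name", "Starter plan"), price])
pricing_row = Molecule("pricing_row", [Atom("tier_label", "Starter"), price])

price.value = "$59/month"  # a single edit to the shared atom

print(product_card.render())  # Starter plan | $59/month
print(pricing_row.render())   # Starter | $59/month
```

Because both molecules point at the same `price` object, one edit is enough to update every place it appears.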

How Do You Model Content Collections Step-by-Step?

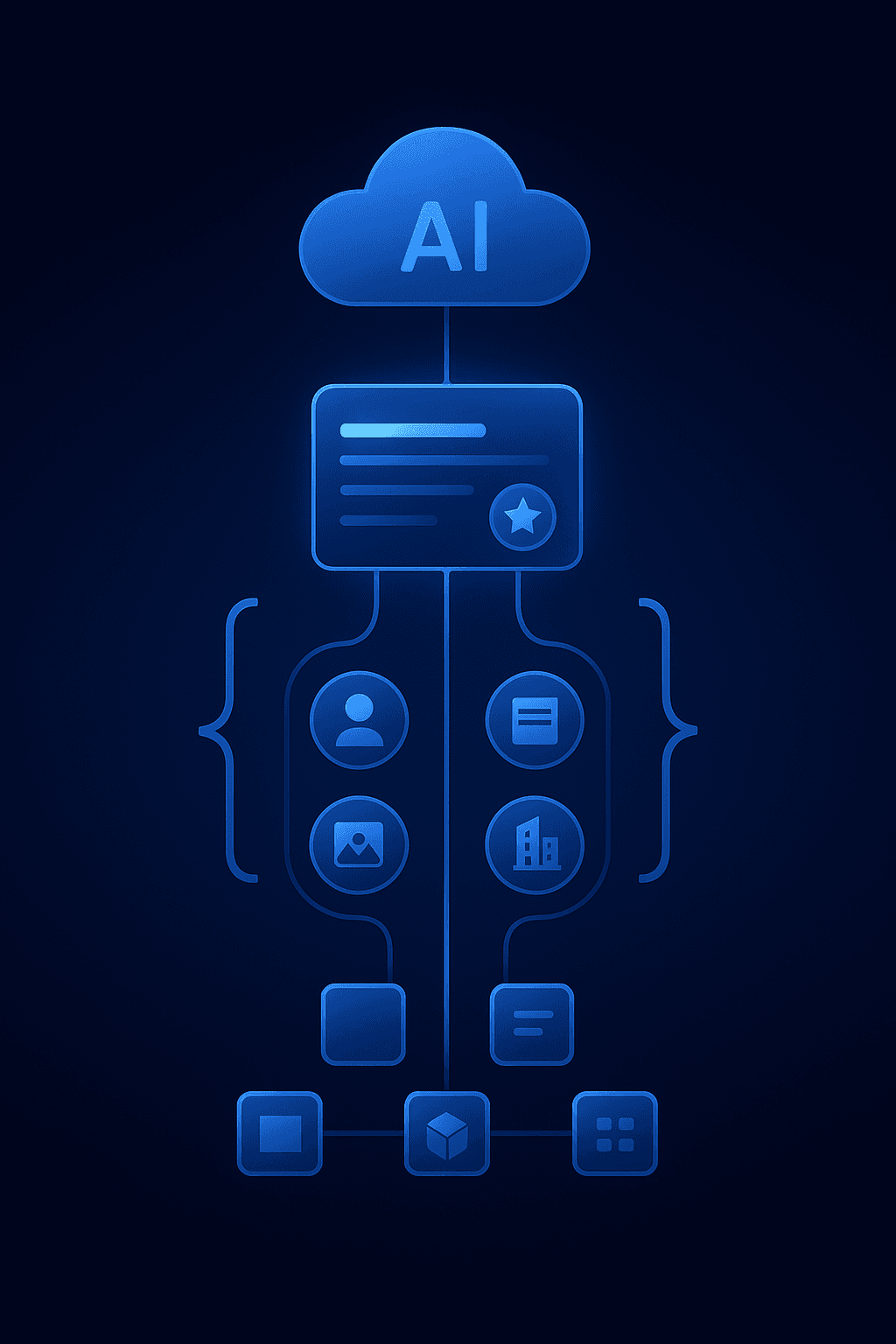

Content modeling is the process of defining the types of content you need, the attributes of each one, and the relationships between them.

Follow this workflow to design collections that LLMs can easily parse:

1. Identify content types: Determine which reusable containers you need (articles, case studies, FAQs, product comparisons). A content type is a reusable container for storing content with a common structure and purpose.

2. Define attributes: Specify the fields each content type needs.

3. Establish relationships: Connect content types to create a semantic web. "The content model describes the type of content you want to create, how it relates to each other, and how it can be edited within your system."

4. Generate APIs automatically: Modern platforms like Hygraph provide an editing interface as well as a highly versatile GraphQL API.

5. Iterate based on performance: Keep content models flexible so they scale easily as the project grows.
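The first three steps of this workflow can be sketched as plain data structures. This is an illustrative model only: the `Attribute`, `Relation`, and `ContentType` classes, the field names, and the `validate_model` helper are all hypothetical and do not correspond to a specific platform's schema format.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Attribute:
    name: str
    type: str              # e.g. "string", "richtext", "date"
    required: bool = True


@dataclass(frozen=True)
class Relation:
    name: str
    target: str            # name of the content type being referenced
    many: bool = False


@dataclass
class ContentType:
    name: str
    attributes: list
    relations: list = field(default_factory=list)


def validate_model(types):
    """Return relations whose target is not a defined content type."""
    known = {t.name for t in types}
    return [
        f"{t.name}.{r.name} -> {r.target}"
        for t in types
        for r in t.relations
        if r.target not in known
    ]


author = ContentType("Author", [Attribute("name", "string"), Attribute("bio", "richtext", required=False)])
faq = ContentType("FAQ", [Attribute("question", "string"), Attribute("answer", "richtext")])
article = ContentType(
    "Article",
    [Attribute("title", "string"), Attribute("body", "richtext"), Attribute("updated_at", "date")],
    [Relation("author", "Author"), Relation("faqs", "FAQ", many=True), Relation("product", "Product")],
)

# "Product" has not been modeled yet, so the check reports the dangling relation.
print(validate_model([author, faq, article]))  # ['Article.product -> Product']
```

A check like this is cheap to run whenever the model changes, which supports the iterate step: relationships that point nowhere surface before they reach published content.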

Linking Entities & Relationships

Entities are the building blocks of how search engines understand information. Entity-first content optimization focuses on creating content around specific entities -- people, places, products -- rather than just keywords.

This matters because LLMs don't just match keywords. They map intent to entities and then assemble guidance that covers the full decision path. When your content model explicitly defines entity relationships, AI systems can traverse those connections to generate comprehensive, accurate answers that cite your domain.
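To make "traversing those connections" concrete, the sketch below walks a small entity graph breadth-first and reports how many hops each related entity is from a starting point. The graph, the entity names, and the relationship labels are invented for illustration; how a given AI system actually traverses content is not something this example claims to reproduce.

```python
from collections import deque

# Hypothetical entity graph: entity -> list of (relationship, target entity).
GRAPH = {
    "Acme CRM": [("madeBy", "Acme Inc."), ("comparedWith", "Other CRM"), ("documentedIn", "Setup guide")],
    "Acme Inc.": [("foundedBy", "Jane Doe")],
    "Setup guide": [("writtenBy", "Jane Doe")],
    "Other CRM": [],
    "Jane Doe": [],
}


def related_entities(graph, start, max_hops=2):
    """Breadth-first walk returning {entity: hop distance} within max_hops."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if distances[current] == max_hops:
            continue  # do not expand beyond the hop limit
        for _relationship, target in graph.get(current, []):
            if target not in distances:
                distances[target] = distances[current] + 1
                queue.append(target)
    del distances[start]
    return distances


print(related_entities(GRAPH, "Acme CRM"))
```

Entities that are only reachable through undefined relationships never show up in the result, which is the practical argument for declaring relationships explicitly in the content model.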

Add Structured Data for AI Search

Structured data helps machines understand what your content means, not just what it says.

Google reiterates that structured data helps it understand page content and recommends JSON-LD for ease of implementation. In 2025, quality schema is still one of the highest-ROI, controllable inputs for machine understanding.

AI platforms increasingly rely on machine-readable signals to interpret, extract, and attribute information. Structured data clarifies entities, relationships, and key facts, improving extraction quality for AI summaries and assistants.

Schema.org is defined as two hierarchies: one for textual property values, and one for the things that they describe. This structure enables precise categorization that LLMs can leverage.
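As a rough illustration of what this looks like in practice, the sketch below turns one collection item into a schema.org `Article` object and wraps it in the script element pages typically embed. The item's field names (`slug`, `title`, `published`, `modified`, `author`), the `#article` fragment convention, and the example URL are assumptions for the example, not a required layout.

```python
import json


def article_jsonld(item, base_url):
    """Build a schema.org Article object from a collection item (a plain dict)."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "@id": f"{base_url}/{item['slug']}#article",
        "headline": item["title"],
        "datePublished": item["published"],
        "dateModified": item["modified"],
        "author": {"@type": "Person", "name": item["author"]},
    }


def script_tag(data):
    """Wrap a JSON-LD object in a script element."""
    # Escape "</" so content can never close the script element early;
    # "\/" is a valid JSON escape for "/".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + body + "</script>"


# Hypothetical collection item.
item = {
    "slug": "content-collections",
    "title": "How to Structure Content Collections",
    "published": "2025-01-15",
    "modified": "2025-03-01",
    "author": "Jane Doe",
}

doc = article_jsonld(item, "https://example.com/blog")
print(script_tag(doc))
```

Generating the markup from the same fields that drive the page keeps the visible content and the structured data from drifting apart.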

Schema Best Practices in Collections

Implement these practices across your content collections:

Use stable URLs and consistent IDs: Add @id for Organization and Person entities and reuse across pages

Link to authoritative profiles: Point to them via sameAs, and reference your Organization and Person entities from articles, products, and author blocks

Implement multimedia schema: For video/podcast/audio, include VideoObject/PodcastSeries/AudioObject with duration, transcript URLs, and thumbnailUrl

Maintain dateModified and citations: Keep dateModified current, cite primary sources directly in content, and reflect those citations in schema using citation arrays

Use CMS templates for bulk deployment: Set nightly validations and maintain version control

Schema is an enabler for machine comprehension and feature eligibility. It's not a ranking factor -- quality, authority, and freshness still drive outcomes -- but experiments suggest strong schema improves inclusion odds.
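A nightly validation pass over these practices could be as simple as the sketch below. The checks are illustrative house rules drawn from the list above, not schema.org or Google requirements, and the function name `validate_jsonld` is hypothetical. It also assumes dates are stored as plain `YYYY-MM-DD` strings.

```python
from datetime import date


def validate_jsonld(doc):
    """Return a list of problems found in one JSON-LD object (empty if none)."""
    problems = []
    if doc.get("@context") != "https://schema.org":
        problems.append("unexpected @context")
    if "@type" not in doc:
        problems.append("missing @type")
    if doc.get("@type") in {"Organization", "Person"}:
        if "@id" not in doc:
            problems.append("entity has no stable @id")
        if not doc.get("sameAs"):
            problems.append("entity has no sameAs links")
    published, modified = doc.get("datePublished"), doc.get("dateModified")
    if published and modified:
        # Assumes plain YYYY-MM-DD strings.
        if date.fromisoformat(modified) < date.fromisoformat(published):
            problems.append("dateModified is earlier than datePublished")
    return problems


# Hypothetical objects with deliberate mistakes.
org = {"@context": "https://schema.org", "@type": "Organization", "name": "Example Co"}
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Example headline",
    "datePublished": "2025-03-01",
    "dateModified": "2025-01-15",
}

print(validate_jsonld(org))
print(validate_jsonld(article))
```

A custom check like this complements, rather than replaces, an external schema validator: it catches violations of your own conventions that a generic validator has no way to know about.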

Keep Collections Fresh at Scale

Content freshness is one of the most underappreciated factors in AI search visibility.

"Most teams still treat big SEO pieces as 'evergreen' assets, but automated content refreshing is quickly becoming the only reliable way to keep those pages accurate, competitive, and visible inside AI-driven experiences."

-- Single Grain

The data supports aggressive refresh strategies. Content refreshes deliver 3-5x higher ROI than creating new content by using existing authority signals and backlinks. Successful refreshes show measurable improvements in traffic, rankings, and AI visibility within 30-60 days of implementation.

A high-performing refresh is a surgical rewrite that preserves equity while expanding topical coverage and machine-readability. AI accelerates research and drafting, but humans lock in E-E-A-T and originality.

Different content types decay at different speeds:

| Content Type | Recommended Refresh Cadence |
|---|---|
| Pricing, compliance | Monthly |
| Product features | Quarterly |
| How-to guides | Every 90-120 days |
| Thought leadership | Semi-annually |
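The cadence table translates naturally into a scheduling check. The sketch below is one possible reading of it: the day counts are approximations (monthly as 30 days, quarterly as 90, semi-annually as 182, and the lower bound of the 90-120 day range for how-to guides), and the type keys, item fields, and `due_for_refresh` function are hypothetical.

```python
from datetime import date, timedelta

# Approximate day counts for the cadences in the table (assumptions, adjust to policy).
REFRESH_DAYS = {
    "pricing": 30,
    "compliance": 30,
    "product_features": 90,
    "how_to": 90,               # lower bound of the 90-120 day range
    "thought_leadership": 182,
}


def due_for_refresh(items, today):
    """Return slugs that have reached their refresh cadence, most overdue first."""
    due = []
    for item in items:
        limit = timedelta(days=REFRESH_DAYS[item["type"]])
        overdue = (today - item["updated"]) - limit
        if overdue >= timedelta(0):
            due.append((overdue, item["slug"]))
    return [slug for _, slug in sorted(due, reverse=True)]


# Hypothetical collection items.
items = [
    {"slug": "pricing-page", "type": "pricing", "updated": date(2025, 1, 1)},
    {"slug": "crm-setup-guide", "type": "how_to", "updated": date(2024, 11, 1)},
    {"slug": "ai-search-outlook", "type": "thought_leadership", "updated": date(2024, 12, 1)},
]

print(due_for_refresh(items, today=date(2025, 3, 1)))
# ['crm-setup-guide', 'pricing-page']
```

Sorting by how far past the cadence each item is gives editors a priority queue rather than a flat list of stale pages.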

On a GEO-native platform, an autonomous refresh capability continuously scans your entire content library for outdated information. When your product releases new features, updates pricing, or changes positioning, the platform automatically identifies all affected content and refreshes it to maintain accuracy. The refresh system auto-syncs with your knowledge base (product specs, documentation, release notes, pricing pages), so dependent content updates automatically and every article stays citation-ready.

Key Takeaways

Structuring content collections for LLM citations requires intentional architecture, not just good writing.

Here's your action checklist:

Adopt collection-based architecture: Move beyond page-centric thinking to organized content libraries that AI can easily traverse

Model content with relationships: Define content types, attributes, and entity connections that mirror how LLMs reason about information

Implement structured data systematically: Use JSON-LD schema across collections with consistent entity IDs and proper linking

Automate refresh workflows: Schedule regular updates based on content type and sync with your knowledge base

Choose the right infrastructure: Traditional CMS platforms were built for 2000s-era SEO -- GEO-native platforms like Relixir are built for AI search

As buyer behavior shifts from Google searches to AI-powered engines like ChatGPT, Perplexity, and Claude, the companies that establish AI search visibility today will have significant competitive advantages. Relixir's agentic CMS enables companies to create any content collection and then generate and refresh unlimited items within those collections -- the exact architecture LLMs need to cite your brand as the authoritative answer.

Ready to structure your content for AI citations? Start by learning more about Relixir's platform to see how collection-based architecture can transform your AI search visibility.

Frequently Asked Questions

Why are content collections important for LLM citations?

Content collections are crucial for LLM citations because AI search engines require well-organized, comprehensive content libraries to provide accurate and authoritative answers. This structured approach allows AI to efficiently retrieve and cite relevant information.

What are the limitations of traditional CMS platforms for AI search?

Traditional CMS platforms often struggle with AI search because they rely on page-based architecture, manual publishing workflows, and lack automated content refresh capabilities. These limitations hinder their ability to scale and maintain visibility in AI-driven search environments.

How does collection-based architecture benefit AI search visibility?

Collection-based architecture benefits AI search visibility by organizing content into modular, reusable components that AI can easily parse and cite. This approach supports scalability and ensures content remains fresh and relevant for AI-generated answers.

What role does structured data play in AI search optimization?

Structured data helps AI understand the meaning of content, not just the text. By implementing JSON-LD schema, content becomes more machine-readable, improving extraction quality for AI summaries and increasing the likelihood of being cited in AI search results.

How does Relixir's GEO-native CMS enhance content for AI search?

Relixir's GEO-native CMS enhances content for AI search by providing a collection-based architecture that supports automated content generation and refresh. This ensures content is always up-to-date and structured for optimal AI citation, giving companies a competitive edge in AI search visibility.

Sources

https://www.forrester.com/blogs/the-end-of-the-monolithic-cms/

https://www.forrester.com/report/buyers-guide-content-management-systems-2025/RES182341

https://www.forrester.com/blogs/new-research-content-management-systems-trends-landscape/

https://hygraph.com/docs/getting-started/fundamentals/content-modeling

https://searchengineland.com/guide/entity-first-content-optimization

https://geneo.app/blog/structured-data-schema-markup-ai-search-best-practices/

https://geneo.app/blog/schema-markup-best-practices-ai-citations-2025/