What Makes a CMS "AI-Native"?

An AI-native CMS embeds machine learning into core workflows (authoring, orchestration, and delivery) rather than adding AI features as afterthoughts. These platforms require schema-first content models, open APIs, microservices architecture, and built-in governance to enable autonomous agents that can create, optimize, and publish content at scale. Governance is part of that foundation: 75% of platforms are expected to embed AI governance by 2027.

Key Facts

• Architecture requirements: AI-native platforms need schema-first models, bidirectional APIs, headless microservices, and dedicated LLM-serving layers to enable AI capabilities at each layer

• Autonomous agents: Replace manual workflows by reasoning dynamically, self-correcting, and executing complex multi-step tasks, with early adopters reporting a 70% reduction in iteration cycles

• RAG and vectors: Enable accurate, citation-rich content by injecting external context at runtime, crucial for generative engine optimization

• Governance controls: Include guardrails, prompt shields, groundedness detection, and audit trails. Gartner predicts these will become primary differentiators, with 75% of platforms expected to embed them by 2027

• Performance impact: AI-powered personalization can deliver 7x higher click-through rates through real-time content adaptation, according to Contentful

• Platform evaluation: Focus on embedded vs. bolt-on AI, enterprise-ready APIs, AI-search traffic attribution, and continuous content refresh capabilities

An AI-native CMS rewrites the rules of digital experience by embedding large language models, Retrieval Augmented Generation, and governance from the ground up rather than bolting on AI widgets after the fact. If your current platform treats machine learning as an afterthought, you are likely leaving speed, personalization, and citation quality on the table.

This guide explains the architectural pillars, agentic workflows, data layers, governance controls, and selection criteria that separate genuinely AI-native content management from legacy systems with a fresh coat of AI paint.

What Is an AI-Native CMS and Why Does It Matter?

An AI CMS is a content management system that uses artificial intelligence to reduce manual processes and improve content delivery. Where traditional platforms store pages and wait for editors to act, an AI-native CMS embeds intelligence into authoring, orchestration, and delivery workflows so that every action benefits from machine learning.

"AI is set to be a catalyst for redefining technology as we know it. For content management, it's reshaping CMS architectures, making them more dynamic and effective in delivering content-rich experiences more efficiently and with more scale." -- CrafterCMS

Legacy CMS platforms were not architected with AI and personalization in mind. They lack the schema-first models, open APIs, and vector layers that let autonomous agents create, remix, and publish content at scale. Choosing a CMS in 2026 has become a strategic security, compliance, and reliability decision that product and platform teams must own together.

Key takeaway: An AI-native CMS makes intelligence foundational rather than optional, turning content operations into a competitive advantage.

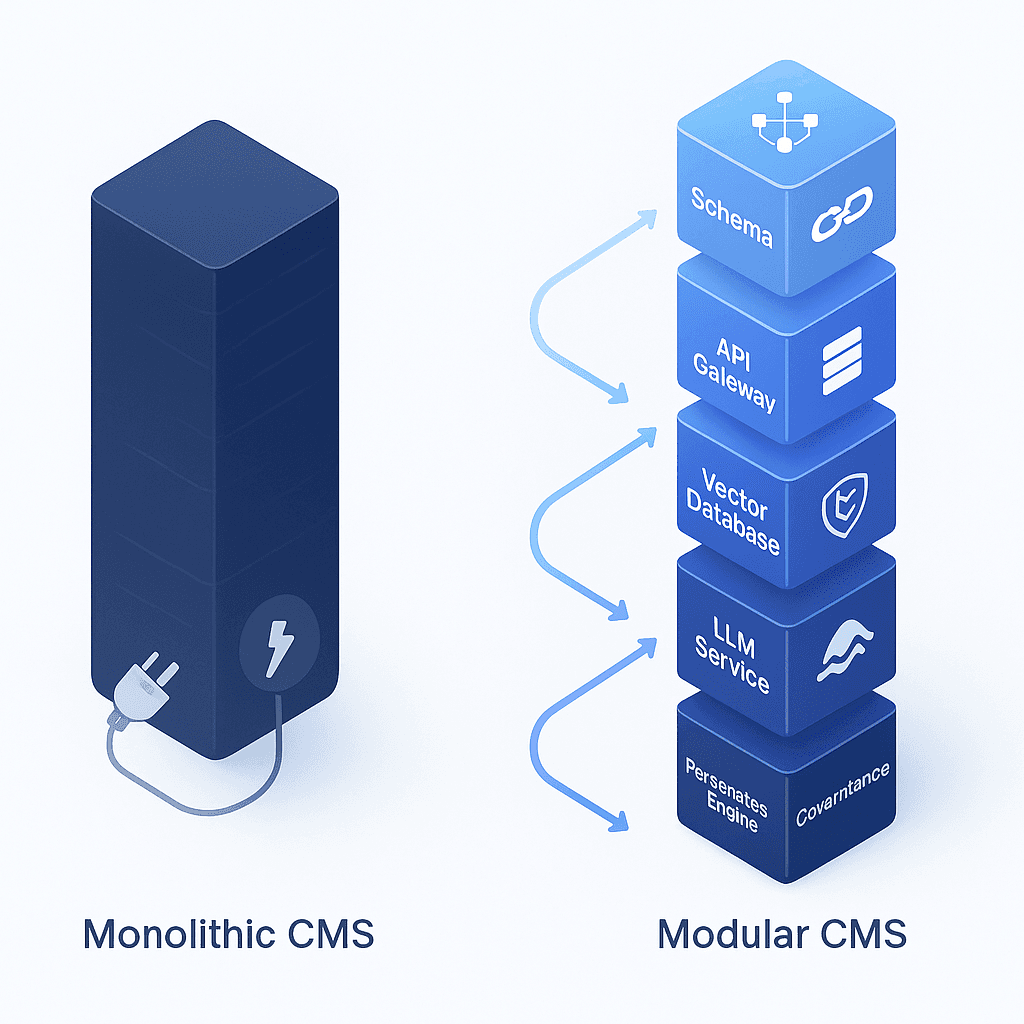

Which Architectural Pillars Make a CMS Truly AI-Native?

AI-native capabilities must be embedded at every layer of a CMS, not just sprinkled into the editor. The table below summarizes the pillars that enable scalable, low-latency AI workflows.

| Pillar | What It Does | Why It Matters |
|---|---|---|
| Schema-first content models | Give every field explicit meaning | Repository is fully accessible to AI without manual parsing |
| Open bidirectional APIs | Let agents create, update, and assemble content programmatically | Eliminates brittle workarounds |
| Headless microservices | Separate data, logic, and presentation | Inference jobs scale independently of the front end |
| LLM-serving layer | Hosts model endpoints and caching | Maintains throughput under high query-per-second loads |
| Throughput and latency management | Optimizes token generation speed | Keeps response times acceptable for real-time personalization |

GraphQL has gained popularity for flexible querying, while REST APIs remain crucial for many integration scenarios. "The technical challenge really isn't building this pipeline -- it's building it in a way that's reliable, scalable, and doesn't bog down resources," according to industry experts. Throughput -- defined as the number of generated tokens per second across concurrent requests -- becomes the north-star metric for AI workloads.
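
To make the throughput definition concrete, here is a minimal sketch that computes generated tokens per second across a batch of concurrent requests. The `CompletedRequest` record and its field names are assumptions for illustration, not part of any particular serving stack.

```python
from dataclasses import dataclass


@dataclass
class CompletedRequest:
    """One finished generation request (hypothetical record shape)."""
    generated_tokens: int
    started_at: float   # seconds since some shared reference point
    finished_at: float  # seconds since the same reference point


def throughput_tokens_per_second(requests: list) -> float:
    """Total generated tokens divided by the wall-clock window spanning all requests."""
    if not requests:
        return 0.0
    window_start = min(r.started_at for r in requests)
    window_end = max(r.finished_at for r in requests)
    elapsed = window_end - window_start
    if elapsed <= 0:
        return 0.0
    return sum(r.generated_tokens for r in requests) / elapsed


# Two overlapping requests: 500 tokens generated over a 5-second window.
batch = [
    CompletedRequest(generated_tokens=200, started_at=0.0, finished_at=4.0),
    CompletedRequest(generated_tokens=300, started_at=1.0, finished_at=5.0),
]
print(throughput_tokens_per_second(batch))  # 100.0
```

Measuring over the shared window, rather than averaging per-request speeds, is what captures the benefit of serving requests concurrently.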

Key takeaway: Without schema-first models, open APIs, and a microservices backbone, AI features will always feel bolted on.
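
As a rough illustration of what "schema-first" buys an agent, the sketch below declares a content type up front and validates an entry against it before anything is written. The `ARTICLE_SCHEMA` fields and the `validate_entry` helper are hypothetical names chosen for this example, not the API of any specific CMS.

```python
# Hypothetical content type: every field has an explicit name and expected type.
ARTICLE_SCHEMA = {
    "title": str,
    "summary": str,
    "body": str,
    "locale": str,
    "tags": list,
}


def validate_entry(entry: dict, schema: dict) -> list:
    """Return a list of human-readable problems; an empty list means the entry fits the schema."""
    errors = []
    for name, expected_type in schema.items():
        if name not in entry:
            errors.append(f"missing field: {name}")
        elif not isinstance(entry[name], expected_type):
            errors.append(f"{name}: expected {expected_type.__name__}")
    for name in entry:
        if name not in schema:
            errors.append(f"unknown field: {name}")
    return errors


agent_draft = {
    "title": "What Makes a CMS AI-Native?",
    "summary": "A short overview.",
    "body": "Full text goes here.",
    "locale": "en-US",
    "hero": "image-1.png",  # not declared in the schema
}
print(validate_entry(agent_draft, ARTICLE_SCHEMA))
```

Because the schema is data rather than a page template, the same definition can be handed to an agent as a description of what it is allowed to write.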

How Do Autonomous Agents Replace Traditional Workflows?

Agentic content management marks a new paradigm that goes beyond headless and composable architectures. Instead of executing rigid, step-by-step rules, autonomous agents reason, plan, and self-correct in real time.

Agents are AI applications that act autonomously to fulfill complex, multi-step goals that require planning. Unlike traditional workflow steps, agents:

Reason dynamically about which tools to use and when

Self-correct when encountering obstacles

Execute chain-of-thought reasoning to break down complex problems

Autonomously fill fields across connected tools

Make decisions based on real-time information

Early adopters report a 70 percent reduction in iteration cycles and 40 percent faster evaluation timelines. By handling drafting, localization, SEO checks, and publishing autonomously, agents free content teams to focus on strategy rather than production drudgery.
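
The plan, check, and self-correct loop described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: `draft` stands in for a model call, the 60-character title rule is an invented SEO check, and a real agent would choose among many tools rather than a fixed sequence.

```python
MAX_TITLE_LENGTH = 60  # hypothetical SEO rule used only for this example


def draft(state: dict) -> dict:
    """Stand-in for a model call that writes a first draft."""
    return {**state, "title": "A very long working title " * 4, "body": "Draft body."}


def seo_check(state: dict) -> list:
    """Return a list of problems; an empty list means the check passed."""
    problems = []
    if len(state["title"]) > MAX_TITLE_LENGTH:
        problems.append("title_too_long")
    return problems


def revise(state: dict, problems: list) -> dict:
    """Self-correction step: address each reported problem."""
    if "title_too_long" in problems:
        state = {**state, "title": state["title"][:MAX_TITLE_LENGTH].strip()}
    return state


def run_agent(goal: str, max_revisions: int = 3) -> dict:
    """Draft, check, and revise until the checks pass or the revision budget runs out."""
    state = draft({"goal": goal, "log": []})
    for _ in range(max_revisions):
        problems = seo_check(state)
        state["log"].append(problems or ["ok"])
        if not problems:
            state["status"] = "published"
            return state
        state = revise(state, problems)
    state["status"] = "needs_human_review"
    return state


result = run_agent("Publish an explainer on AI-native CMS platforms")
print(result["status"], result["log"])
```

Capping the number of revisions and falling back to human review keeps the loop from running indefinitely when the agent cannot fix a problem on its own.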

Key takeaway: Agentic workflows collapse weeks of manual review into hours, shifting your team from operators to strategists.

Why Do Vectors & RAG Power Smarter GEO Experiences?

Retrieval Augmented Generation improves a model's responses by injecting external context at runtime. Rather than relying solely on training data, RAG queries a knowledge base, retrieves relevant documents, and augments the prompt before generation. The result is more accurate, citation-rich content that performs well in generative engine optimization.
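
The query, retrieve, and augment steps can be sketched as follows. This is a toy example: retrieval here uses plain word overlap instead of embeddings, and the `KNOWLEDGE_BASE` documents, ids, and prompt wording are all invented for illustration.

```python
import re

# Hypothetical knowledge base; a real one would live in a search or vector index.
KNOWLEDGE_BASE = [
    {"id": "doc-1", "text": "Our CMS exposes a GraphQL API for content delivery."},
    {"id": "doc-2", "text": "Audit trails record every prompt and task execution."},
    {"id": "doc-3", "text": "Pricing is billed annually per workspace."},
]


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list, top_k: int = 2) -> list:
    """Rank documents by how many words they share with the query; drop non-matches."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(d["text"])), d) for d in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]


def build_prompt(query: str, retrieved: list) -> str:
    """Augment the user question with the retrieved sources before generation."""
    context = "\n".join(f"[{d['id']}] {d['text']}" for d in retrieved)
    return (
        "Answer using only the sources below and cite their ids.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


question = "Which API does the CMS expose for delivery?"
prompt = build_prompt(question, retrieve(question, KNOWLEDGE_BASE))
print(prompt)
```

Tagging each source with an id in the prompt is what lets the generated answer carry citations back to specific documents.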

Qdrant describes itself as an AI-native vector database and semantic engine purpose-built for this task. Vector databases store high-dimensional embeddings that capture meaning and context, enabling fast similarity searches even when exact keywords differ.
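
To show what a similarity search over embeddings does at its core, here is a brute-force sketch using cosine similarity. The three-dimensional vectors and page names are made up for readability; real embeddings have hundreds or thousands of dimensions, and a vector database would handle storage and indexing instead of a linear scan.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical index: page name -> toy embedding.
INDEX = {
    "pricing-page": [0.9, 0.1, 0.0],
    "api-reference": [0.1, 0.9, 0.2],
    "governance-guide": [0.0, 0.2, 0.9],
}


def nearest(query_vector: list, index: dict, top_k: int = 1) -> list:
    """Return the names of the top_k entries most similar to the query vector."""
    ranked = sorted(
        index,
        key=lambda name: cosine_similarity(query_vector, index[name]),
        reverse=True,
    )
    return ranked[:top_k]


# A query embedding that points mostly along the second dimension.
print(nearest([0.2, 0.8, 0.1], INDEX))  # ['api-reference']
```

Because the comparison is between directions in vector space rather than between strings, a query can match a page even when the two share no exact keywords.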

RAG is especially helpful when your CMS needs to answer questions about content that is not part of its training data, such as company-specific documentation or recent events. For generative engine optimization, grounding answers in real-time, authoritative sources increases the likelihood that AI search engines cite your pages.

Key takeaway: Vectors plus RAG transform static repositories into dynamic knowledge layers that fuel accurate, citable AI responses.

How Do You Keep AI Content Safe and Observable?

Gartner predicts that by 2027, robust AI governance and trust, risk, and security management will be the primary differentiators of AI offerings, with 75 percent of platforms expected to embed these capabilities to stay competitive. Without proper oversight, AI-generated content can lead to inconsistent branding, regulatory issues, and reputational risks.

Observability refers to understanding the internal state of a system based on the data it produces. For AI content, that means logging every prompt, tracking anomalies, and analyzing user feedback in real time.

Key governance and observability controls include:

Guardrails: Guidelines and practices designed to ensure safe and responsible AI use

Prompt shields: Defend against injection attacks and jailbreak attempts

Groundedness detection: Identify and correct hallucinations by verifying outputs against source materials

Audit trails: Maintain a secure history of all prompts, interactions, and task executions

Human-in-the-loop checkpoints: Require approval for sensitive or high-impact content changes
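
Two of the controls above, audit trails and human-in-the-loop checkpoints, can be sketched together. Everything named here is an assumption for illustration: the `SENSITIVE_TAGS` set, the `AuditTrail` class, and the choice to chain entries by hash (one common way to make a history tamper-evident, not the only one).

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional

SENSITIVE_TAGS = {"legal", "pricing"}  # hypothetical tags that require approval


@dataclass
class AuditTrail:
    """Append-only log in which each entry stores the hash of the previous entry."""
    entries: list = field(default_factory=list)

    def record(self, actor: str, action: str, payload: dict) -> dict:
        previous_hash = self.entries[-1]["hash"] if self.entries else ""
        body = json.dumps(
            {"actor": actor, "action": action, "payload": payload, "prev": previous_hash},
            sort_keys=True,
        )
        entry = {
            "actor": actor,
            "action": action,
            "payload": payload,
            "prev": previous_hash,
            "hash": hashlib.sha256(body.encode("utf-8")).hexdigest(),
        }
        self.entries.append(entry)
        return entry


def requires_human_approval(change: dict) -> bool:
    """A change is sensitive if it carries any tag from SENSITIVE_TAGS."""
    return bool(SENSITIVE_TAGS & set(change.get("tags", [])))


def apply_change(change: dict, trail: AuditTrail, approved_by: Optional[str] = None) -> str:
    """Log the proposal, then publish it or hold it for review."""
    trail.record("agent", "proposed", change)
    if requires_human_approval(change) and approved_by is None:
        trail.record("system", "held_for_review", {"id": change["id"]})
        return "held_for_review"
    trail.record(approved_by or "agent", "published", {"id": change["id"]})
    return "published"


trail = AuditTrail()
print(apply_change({"id": "p1", "tags": ["pricing"]}, trail))  # held_for_review
print(apply_change({"id": "p2", "tags": ["blog"]}, trail))     # published
```

Routine changes publish immediately while sensitive ones wait for a named approver, and both paths leave a record.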

Governance is an enabler, not a brake. It unlocks speed where it is safe to do so while protecting the parts of your brand that must remain controlled.

Personalization & Continuous Optimization at Scale

AI dynamically adapts content in real time using signals such as browsing history, purchase behavior, location, and device type. Platforms like Contentful report that personalization can deliver 7x higher click-through rates when executed correctly.

Revenue use cases enabled by AI-native personalization and GEO refresh loops:

1-to-1 journeys: Assemble landing pages dynamically based on persona, industry, and funnel stage

Real-time targeting: Deliver adaptive content based on session data, device type, or user history

Continuous A/B/n testing: Experiment with variants and automatically promote winners

Automated content refresh: Detect outdated pages and regenerate them to maintain rankings and citation accuracy

Cross-channel consistency: Generate on-brand variations for every audience and channel without manual rework
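
The "experiment and promote winners" step in the list above can be illustrated with a small sketch. It simply picks the variant with the highest click-through rate once it has enough traffic; the `MIN_IMPRESSIONS` threshold and variant names are invented, and a production system would typically add a statistical significance test before promoting anything.

```python
from dataclasses import dataclass
from typing import Optional

MIN_IMPRESSIONS = 1000  # hypothetical minimum sample size before a variant can win


@dataclass
class Variant:
    name: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        """Click-through rate; 0.0 when the variant has not been shown yet."""
        return self.clicks / self.impressions if self.impressions else 0.0


def pick_winner(variants: list) -> Optional[Variant]:
    """Return the eligible variant with the highest CTR, or None if none is eligible."""
    eligible = [v for v in variants if v.impressions >= MIN_IMPRESSIONS]
    if not eligible:
        return None
    return max(eligible, key=lambda v: v.ctr)


variants = [
    Variant("headline-a", impressions=2000, clicks=40),
    Variant("headline-b", impressions=2000, clicks=90),
    Variant("headline-c", impressions=100, clicks=20),  # high CTR but too little data
]
winner = pick_winner(variants)
print(winner.name if winner else "keep testing")  # headline-b
```

The impression floor keeps a variant with a handful of lucky clicks from being promoted over one with a well-measured rate.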

Personalizing experiences at scale is no longer optional. AI-native platforms make it operationally feasible by automating what once required armies of editors.

Relixir vs. Legacy CMS: How to Choose an AI-Native Platform?

"The CMS you choose will determine whether AI becomes a core growth driver or just another disconnected tool in your stack." -- Webstacks

Forrester, IDC, and other analyst firms increasingly evaluate CMS vendors on AI-enabled architectures, hybrid headless delivery, and governance readiness. Adobe was named a Leader in The Forrester Wave: CMS, Q1 2025, while IDC published a dedicated AI-Enabled Hybrid Headless CMS assessment for 2025.

| Criterion | Legacy CMS | AI-Native CMS (e.g., Relixir) |
|---|---|---|
| AI integration depth | Plugin or external API | Embedded in authoring, orchestration, and delivery |
| Content model | Template-based, rigid | Schema-first, polymorphic |
| Personalization | Manual segments | Real-time, 1-to-1 journeys |
| GEO optimization | Afterthought | Deep research agents, citation tracking, auto-refresh |
| Governance | Bolt-on compliance tools | Built-in guardrails, audit trails, human-in-the-loop |
| Observability | Basic analytics | AI traffic attribution, prompt logging, sentiment scoring |

Relixir automates GEO end-to-end, from visibility through traffic to conversion. Its deep research agents combine competitor and keyword gaps, product knowledge bases, web research, and social insight mining to generate content optimized for AI citation. The platform also installs a proprietary Visitor ID script that provides up to 3x more accurate person-level identification, pushing high-intent AI-search leads directly into CRMs and sequencing tools.

When evaluating any AI-native CMS, ask:

Does AI operate within core workflows or as an add-on?

Are schema, APIs, and governance enterprise-ready?

Can the platform track and attribute AI-search traffic?

Does it support continuous content refresh for ranking and citation accuracy?

Key Takeaways for the AI-Search Era

Hybrid headless CMS combines flexibility with marketer-friendly tools, including visual editing, previews, AI-assisted authoring, and low-code publishing, bridging the gap between agility and usability.

Audit your current stack: Identify where AI is bolted on versus embedded.

Prioritize schema-first models and open APIs: They unlock every downstream AI capability.

Invest in governance early: Guardrails, observability, and audit trails are non-negotiable for enterprise scale.

Measure GEO, not just SEO: Track AI-search citations, mention rate, and sentiment alongside traditional rankings.

Consider Relixir for turnkey GEO: If you need an end-to-end platform that grows AI-search mentions, drives a 10x increase in traffic, and converts demand into pipeline, Relixir delivers infrastructure purpose-built for the AI-search era.

Frequently Asked Questions

What is an AI-native CMS?

An AI-native CMS integrates artificial intelligence into its core architecture, enabling automated content management processes and enhancing content delivery through machine learning.

How does an AI-native CMS differ from traditional CMS platforms?

Unlike traditional CMS platforms that add AI features as an afterthought, AI-native CMS platforms embed AI capabilities into their foundational architecture, allowing for more dynamic and efficient content management.

What are the architectural pillars of an AI-native CMS?

Key pillars include schema-first content models, open bidirectional APIs, headless microservices, LLM-serving layers, and throughput and latency management, all of which support scalable AI workflows.

How do autonomous agents improve content management?

Autonomous agents replace traditional workflows by reasoning, planning, and self-correcting in real time, significantly reducing iteration cycles and speeding up content evaluation timelines.

What role does Relixir play in AI-native CMS solutions?

Relixir automates GEO processes, integrates AI into core workflows, and provides tools for tracking AI-search traffic, making it a comprehensive solution for AI-native content management.

Sources

https://craftercms.com/blog/technical/technical-details-of-an-ai-cms

https://www.dotcms.com/blog/how-to-choose-a-cms-with-ai-features

https://directus.io/blog/is-your-cms-ready-for-ai-and-personalization

https://langfuse.com/faq/all/monitoring-ai-generated-content

https://developers.google.com/checks/guide/ai-safety/guardrails

https://cmswire.com/web-cms/ai-in-cms-the-road-ahead-for-smarter-content-management

https://www.forrester.com/report/the-forrester-wave-tm-content-management-systems-q1-2025/RES182086

https://martech.org/how-to-build-a-geo-ready-cms-that-powers-ai-search-and-personalization/