ChatGPT not citing your blog? How AEO platforms fix citation issues

ChatGPT and other LLMs often fail to cite quality content because they lack structured data to ground their answers; as a result, they fabricate or mismatch references in over 50% of responses. AEO platforms fix this by implementing JSON-LD schema, entity disambiguation, and semantic signals that turn unstructured content into machine-readable knowledge LLMs trust.

TLDR

ChatGPT-4o generates false or mismatched citations in 51.8% of responses, while ChatGPT-3.5 fabricates references 42.9% of the time

AI citations have become critical as 69% of Google searches now result in zero clicks

Citation patterns change up to 50% monthly across AI platforms, requiring constant monitoring

AEO platforms achieve 22% to 80% citation success rates through systematic optimization

Structured data, FAQ schemas, and entity disambiguation are essential for AI recognition

DIY approaches often fail due to the complexity of tracking multiple AI engines with distinct preferences

Getting cited by an AI model like ChatGPT gives your content long-term visibility. Yet many marketers watch their carefully crafted content get ignored while competitors dominate AI responses. The problem? LLMs fabricate or mismatch references in over 50% of answers because they lack high-confidence, structured data to ground them.

This gap between content quality and AI recognition costs businesses real opportunities. As zero-click searches account for 69% of Google queries and AI-driven interfaces reshape discovery, missing citations means missing customers.

Why does ChatGPT ignore great content (and why does it matter)?

Being cited in an AI-generated answer has become the new Page One. Traditional SEO metrics assume a click-based economy, but AI agents now mediate information access. ChatGPT, Perplexity, and domain-specific bots serve as gatekeepers between your content and potential customers.

The business impact is stark. Generative AI chatbots drive 95-96% less referral traffic than traditional search results. When your content isn't cited, you lose both visibility and the limited traffic AI responses generate. Large Language Models don't parse your site like traditional crawlers; they interpret data, schema, and signals to determine what information to trust and reference.

This shift fundamentally changes content economics. Unlike traditional search where multiple results compete for clicks, AI synthesizes information into single answers with minimal citations. Those few cited sources capture nearly all value from user queries.

Root causes of AI citation failures: bias, hallucination & data gaps

LLMs consistently fail at accurate citation, with ChatGPT-4o fabricating or mismatching references in 51.8% of responses. This isn't a minor glitch; it's a systemic issue rooted in how models process and retrieve information.

The hallucination problem stems from training data quality. While ChatGPT-3.5 offered seemingly pertinent references, a large percentage proved to be fictitious. Even specialized models like ScholarGPT, designed for academic use, still produce high rates of fabricated citations compared to general-purpose models.

Bias compounds the problem. Research shows LLMs exhibit a pronounced bias toward highly cited sources, amplifying existing preferences like the Matthew effect. Models favor sources that are already widely cited, creating feedback loops that exclude newer or less prominent content regardless of quality.

Memory interference creates additional citation failures. When multiple sources share similar content, models struggle to disambiguate, leading to misattribution. Studies confirm that the hallucination of non-existent papers remains a major issue, particularly for content outside the model's core training distribution. As one report states: "Large Language Models don't parse your site like a browser or bot; they interpret data, schema, and signals."

How do you measure AI citation gaps with analytics dashboards?

Tracking AI citations requires new metrics beyond traditional SEO. The key measurement is citation volume: how often your content gets referenced across AI interfaces. Optiview's formula captures this: Visibility Score = (Frequency × 0.5) + (Diversity × 0.3) + (Recency × 0.2).
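The weighted sum above is simple to compute once the three components are on a common scale. The sketch below assumes each input has already been normalized to the 0-1 range; the source does not say how Optiview scales Frequency, Diversity, or Recency, so that normalization and the function name are assumptions made for illustration.

```python
def visibility_score(frequency: float, diversity: float, recency: float) -> float:
    """Weighted sum using the 0.5 / 0.3 / 0.2 weights quoted in the text.

    Assumption: every component is pre-normalized to the range 0-1.
    """
    components = (("frequency", frequency), ("diversity", diversity), ("recency", recency))
    for name, value in components:
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return frequency * 0.5 + diversity * 0.3 + recency * 0.2


# Illustrative values only, not measurements from any real site.
score = visibility_score(frequency=0.6, diversity=0.4, recency=0.9)
print(f"Visibility score: {score:.2f}")
```

Because Frequency carries half the weight, a page that is cited often but only by one engine can still outscore a page that is cited rarely across many engines.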

Citation volatility makes consistent monitoring essential. Citation sets change up to 50% monthly, with minimal overlap between platforms. What ChatGPT cites today, Perplexity might ignore tomorrow. Each AI engine has distinct preferences: Gemini leans on websites, while OpenAI models favor listings.
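Both kinds of volatility can be quantified with plain set arithmetic. The sketch below is one possible way to do it, not a method the cited studies prescribe: churn is the share of last month's cited URLs that disappeared, and cross-platform overlap is the Jaccard index of two engines' citation sets. All URLs are hypothetical placeholders.

```python
def citation_churn(previous: set[str], current: set[str]) -> float:
    """Share of the previous period's cited URLs that are missing now."""
    if not previous:
        return 0.0
    return len(previous - current) / len(previous)


def citation_overlap(a: set[str], b: set[str]) -> float:
    """Jaccard index of two citation sets (0 = disjoint, 1 = identical)."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


# Hypothetical citation sets collected for the same prompts.
last_month = {"https://example.com/a", "https://example.com/b",
              "https://example.com/c", "https://example.com/d"}
this_month = {"https://example.com/a", "https://example.com/b",
              "https://example.com/e"}
other_engine = {"https://example.com/b", "https://example.org/x"}

print(f"Monthly churn: {citation_churn(last_month, this_month):.0%}")
print(f"Overlap with other engine: {citation_overlap(this_month, other_engine):.0%}")
```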

Beyond volume, measure citation diversity across domains. Single-source dominance signals vulnerability. Track entity recognition to confirm your brand encodes properly in model memory. Monitor schema coverage to ensure structured data signals topical expertise. These metrics reveal whether your content architecture supports or hinders AI discovery.

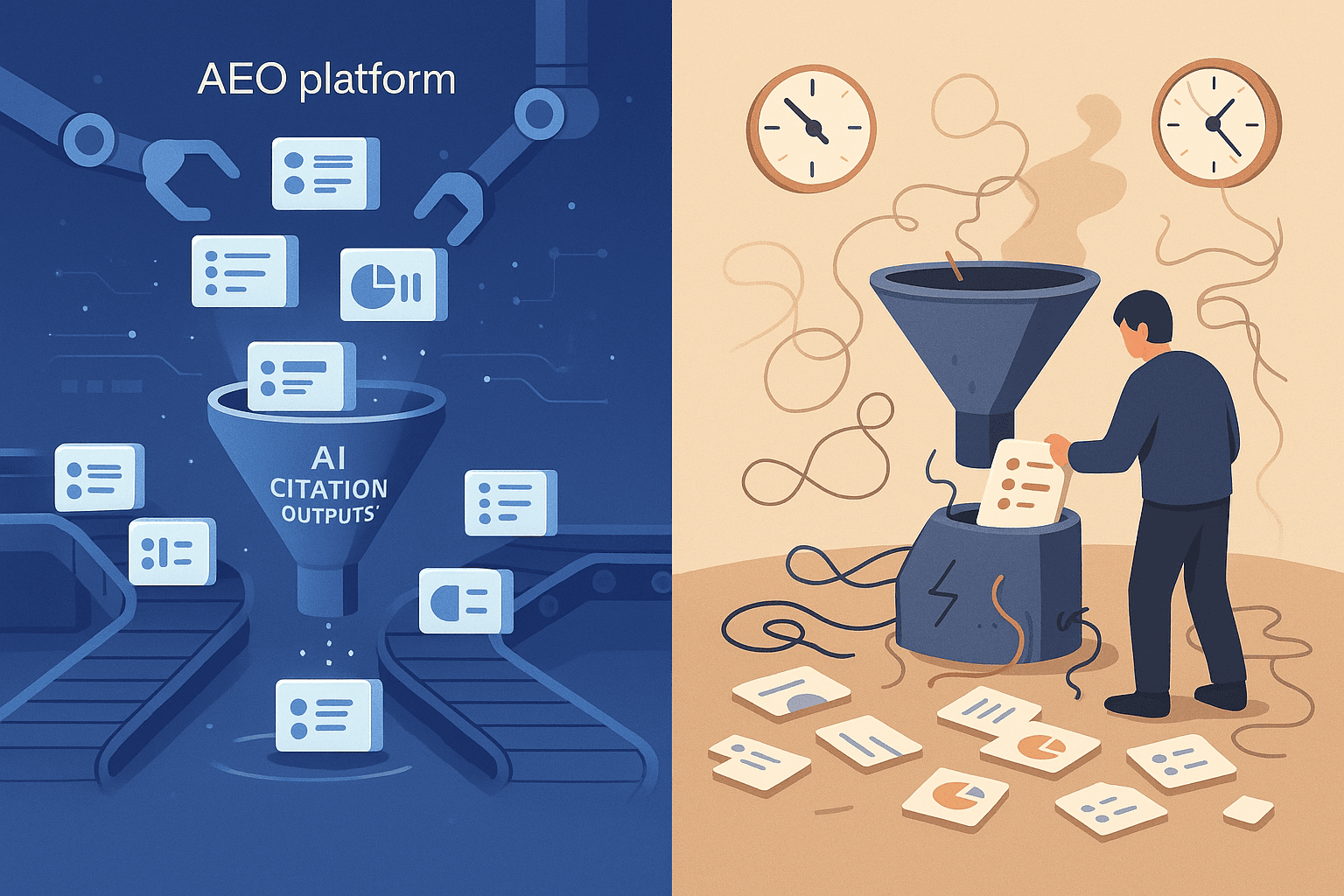

How AEO platforms repair citation pipelines

AEO platforms address citation failures through systematic optimization. They inject JSON-LD and FAQ schema so LLMs can confidently extract facts rather than fabricate them. This structured data transforms unstructured prose into retrievable knowledge that models trust.
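As a concrete illustration of FAQ schema, the sketch below builds a schema.org `FAQPage` object in JSON-LD from question and answer pairs and wraps it in the script tag that pages typically embed. How any particular AEO platform generates its markup is not described in the source; the helper names here are hypothetical.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Serialize question/answer pairs as a schema.org FAQPage in JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


def as_script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script element used to embed it in a page."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


markup = faq_jsonld([
    ("Why does ChatGPT ignore my blog content?",
     "Often because the page lacks structured data and clear trust signals."),
])
print(as_script_tag(markup))
```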

Large Language Models don't parse sites like browsers; they interpret signals. Platforms engineer these signals through entity disambiguation, ensuring your brand and content can't be confused with others. They implement semantic embeddings that align with common query patterns, increasing retrieval probability.
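A common structured-data technique for entity disambiguation is an `Organization` object whose `sameAs` property links the brand to its other official profiles. The sketch below shows that general pattern, which may differ from what a given platform does; the company name and every URL are placeholders.

```python
import json


def organization_jsonld(name: str, url: str, description: str,
                        same_as: list[str]) -> dict:
    """Build a schema.org Organization object with sameAs identity links."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs points at other pages that unambiguously identify the entity.
        "sameAs": same_as,
    }


org = organization_jsonld(
    name="Example Recognition Co.",  # placeholder brand
    url="https://www.example.com",
    description="Employee recognition software for mid-sized teams.",
    same_as=[
        "https://www.linkedin.com/company/example-recognition",
        "https://github.com/example-recognition",
    ],
)
print(json.dumps(org, indent=2))
```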

Citation engineering goes beyond technical fixes. As Growth Marshal defines it, it's the art of making content irresistible to LLMs through intentional structure, entity clarity, and semantic relevance. Platforms automate this process, applying proven patterns across your entire content library rather than requiring manual optimization of individual pages.

How do you make your next post citation-ready?

Start with absolute clarity. Prove you're an expert through E-E-A-T: use detailed author bios, display update dates, cite authoritative sources, and share first-hand experience. This isn't about gaming algorithms; it's about providing verifiable signals of trustworthiness.
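Several of these trust signals have direct counterparts in schema.org's `Article` type: `author`, `datePublished`, `dateModified`, and `citation`. The following sketch assembles them for one post. The field choices are an assumption about what is worth exposing, and all names, dates, and URLs are placeholders.

```python
import json
from datetime import date


def article_jsonld(headline: str, author_name: str, author_url: str,
                   published: date, modified: date,
                   citations: list[str]) -> dict:
    """Build a schema.org Article object carrying author and date signals."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name, "url": author_url},
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
        # Sources the article relies on, listed as plain URLs.
        "citation": citations,
    }


article = article_jsonld(
    headline="How to make a post citation-ready",
    author_name="Jane Doe",  # placeholder author
    author_url="https://www.example.com/authors/jane-doe",
    published=date(2025, 1, 15),
    modified=date(2025, 3, 2),
    citations=["https://www.example.org/research/report"],
)
print(json.dumps(article, indent=2))
```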

Structure content for machine readability. Schema markup makes information easier for bots to parse and surface. Implement Article, FAQ, and How-to schemas that label your content's purpose and structure. Frame headers as questions users actually ask, then answer directly in the first sentence.
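A quick audit can flag headings that are not yet framed as questions. The check below is deliberately crude (it only looks for a trailing question mark) and is meant as a starting point rather than a complete content linter; the function names are hypothetical.

```python
def is_question_heading(heading: str) -> bool:
    """Rough heuristic: a heading counts as a question if it ends with '?'."""
    return heading.strip().endswith("?")


def non_question_headings(headings: list[str]) -> list[str]:
    """Return the headings that still need to be rephrased as questions."""
    return [h for h in headings if not is_question_heading(h)]


headings = [
    "Why does ChatGPT ignore great content (and why does it matter)?",
    "How AEO platforms repair citation pipelines",
    "How do you make your next post citation-ready?",
]
for heading in non_question_headings(headings):
    print(f"Consider rephrasing as a question: {heading}")
```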

Next, identify citation opportunities by analyzing where competitors get referenced. Track their backlink profiles to find sites LLMs already trust, then pursue placements there. Build semantic networks through content clustering and internal linking, creating topical authority that machines recognize.

Mini-case study: Recovering citations after a 50% drop

Growth Marshal worked with a SaaS company specializing in employee recognition software in Q1. Their citation volume had dropped 50% despite maintaining search rankings. The problem? Their blog lacked the structured signals LLMs require.

The team rebuilt content architecture using citation engineering principles. They added JSON-LD schema to every page, rewrote introductions for direct answers, and created entity definitions for their brand and products. Result: Perplexity success rate jumped from 22% to 80%.

Beyond technical changes, they analyzed citation patterns monthly. This revealed platform-specific preferences: ChatGPT favored their how-to content, while Perplexity cited comparison pages. They adjusted content strategy accordingly, creating platform-optimized versions of key topics.

The broader lesson? Recovery requires both immediate technical fixes and ongoing monitoring. Studies show at least 13.5% of 2024 abstracts were processed with LLMs, with rates reaching 40% in some subcorpora. As AI-generated content saturates the web, only systematically optimized content breaks through the noise.

AEO platforms vs. DIY tactics: Which is more cost-effective?

DIY citation engineering seems appealing: add some schema, rewrite introductions, monitor manually. But the economics favor platforms. Traditional SEO metrics are relics of a dying regime. AI-native optimization requires continuous adaptation across multiple engines with distinct preferences.

Consider timeline realities. It can take weeks or months for new content to appear in AI citations. Manual monitoring across ChatGPT, Perplexity, Gemini, and others becomes a full-time job. Meanwhile, citation patterns shift monthly, requiring constant strategy adjustments.

Platform advantages compound over time. Legal departments using AI spend 75% less time per contract review. Similar efficiency gains apply to citation optimization. Platforms automate prompt simulation, track multi-engine performance, and identify gaps faster than manual processes.
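Multi-engine tracking comes down to logging, for each simulated prompt, whether an engine's answer cited you, and then aggregating per engine. The sketch below assumes those results have already been collected by some other process; it does not call any AI engine, and the record layout and sample data are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptResult:
    engine: str   # e.g. "chatgpt" or "perplexity"
    prompt: str   # the simulated user question
    cited: bool   # whether the answer cited your content


def citation_rate_by_engine(results: list[PromptResult]) -> dict[str, float]:
    """Share of simulated prompts per engine whose answer cited your content."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for result in results:
        totals[result.engine] += 1
        if result.cited:
            hits[result.engine] += 1
    return {engine: hits[engine] / totals[engine] for engine in totals}


# Hypothetical log of simulated prompts.
log = [
    PromptResult("perplexity", "best employee recognition software", True),
    PromptResult("perplexity", "how to run a recognition program", True),
    PromptResult("perplexity", "recognition software pricing", False),
    PromptResult("perplexity", "peer recognition tools compared", True),
    PromptResult("chatgpt", "best employee recognition software", False),
    PromptResult("chatgpt", "how to run a recognition program", True),
]
for engine, rate in citation_rate_by_engine(log).items():
    print(f"{engine}: {rate:.0%} of prompts cited")
```

Running the same aggregation every month on a fixed prompt set makes engine-specific gaps visible without manual spot checks.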

Cost comparisons reveal the gap. Mid-tier paid solutions deliver 40-60% better ROI than cobbled-together free tools when total operational costs are calculated. The hidden expense of DIY (missed opportunities while you build systems) often exceeds platform fees.

For teams managing significant content libraries, the choice becomes clearer. SLMs provide 5× to 29× cost reduction compared to GPT-4 APIs. Platforms leverage similar efficiencies, spreading development costs across customers while you focus on content creation.

Key takeaways: Engineering content for the AI search era

The citation crisis isn't temporary. As AI interfaces dominate discovery, "use clear, scannable headings" becomes survival advice. Every piece of content needs machine-readable structure, verifiable facts, and trust signals LLMs recognize.

AEO platforms transform citation engineering from art to system. They monitor volatile citation patterns, implement proven optimization frameworks, and adapt to engine-specific preferences automatically. For businesses serious about AI visibility, platforms provide the infrastructure traditional SEO tools can't match.

The shift from clicks to citations rewrites digital marketing rules. Companies that systematically optimize for AI discovery capture limited citation slots while others remain invisible. As more businesses recognize this reality, citation competition will intensify.

Relixir helps over 200 B2B companies monitor and improve their AI search presence through automated GEO optimization, comprehensive citation tracking across all major AI engines, and deep research agents that generate citation-ready content. Rather than hoping for AI visibility, build the systematic approach that ensures it.

Frequently Asked Questions

Why does ChatGPT ignore my blog content?

ChatGPT may ignore your blog content due to a lack of structured data, schema, and signals that AI models use to determine trustworthiness and relevance. Without these, AI models might fabricate or misattribute citations.

How can AEO platforms help with AI citation issues?

AEO platforms optimize content for AI citation by injecting structured data like JSON-LD and FAQ schema, ensuring that AI models can confidently extract and reference facts from your content.

What are the root causes of AI citation failures?

AI citation failures often stem from issues like hallucination, bias, and data gaps. These occur when AI models fabricate references or favor highly-cited sources, excluding newer content.

How do you measure AI citation gaps?

AI citation gaps can be measured using metrics like citation volume, diversity, and recency. Tools like Optiview's Visibility Score help track how often and where your content is cited across AI interfaces.

What is the advantage of using AEO platforms over DIY tactics?

AEO platforms offer systematic optimization and continuous adaptation across multiple AI engines, providing better ROI and efficiency compared to manual DIY tactics, which can be time-consuming and less effective.

How does Relixir help improve AI search presence?

Relixir enhances AI search presence by automating GEO optimization, tracking citations across AI engines, and generating citation-ready content, ensuring systematic visibility and credibility in AI search results.

Sources

https://www.tandfonline.com/doi/full/10.1080/19322909.2025.2482093?src=exp-la

https://www.similarweb.com/blog/marketing/geo/ai-citation-analysis/

https://llmlogs.com/blog/how-to-write-content-that-gets-cited-by-chatgpt

https://www.growthmarshal.io/blog/citation-engineering-in-ai-responses

https://www.wpbeginner.com/beginners-guide/how-to-get-your-wordpress-content-cited-by-ai-tools/

https://www.getphound.com/how-can-i-make-my-content-more-likely-to-appear-in-chatgpt-answers

https://www.contractscounsel.com/b/ai-contract-review-cost-analysis